Intel is using AVX-512 instructions to outperform AMD in certain AI workloads. Xeon processors can exploit this advantage in specific tasks, though competing with AMD’s EPYC across the board will be much harder.

To compete in the AI processing market, Intel has sharpened its focus on the AVX-512 instruction set, which offers enhanced performance in artificial intelligence applications. This strategic move is part of Intel’s effort to use the vector capabilities of its Xeon processors to surpass AMD’s EPYC in efficiency and processing power for particular AI scenarios.

AVX-512 (Advanced Vector Extensions 512) is a set of 512-bit vector instructions that helps optimize computational tasks and accelerate machine learning algorithms. Despite this advantage, Intel faces an uphill battle across broader processor workloads and markets. It must innovate relentlessly to stay ahead, as AMD continues to gain ground with its robust EPYC processors. The challenge for Intel is substantial, requiring persistent advancement beyond AVX-512 to maintain a competitive edge in the processor industry.

Credit: skatterbencher.com

Intel’s Leap With AVX-512

Intel is stepping up the competition with its AVX-512 instruction set. The technology speeds up data crunching for AI and scientific tasks, and it is Intel’s key to excelling in specific scenarios. For heavy workloads such as AI, AVX-512 can give Xeon chips an edge over AMD’s EPYC. Yet Intel knows this is only the start of the wider race with AMD.

The Evolution Of Vector Extensions

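Intel has been improving its chips over time, and vector extensions are one of the big leaps it made. They started with SSE and its 128-bit registers, grew to 256 bits with AVX, and now reach 512 bits with AVX-512. They allow a CPU to process data in chunks rather than one value at a time, which makes the work quicker.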
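To make "processing data in chunks" concrete, here is a small sketch that works out how many values fit into one register for each generation. The 128, 256, and 512-bit widths are the architectural register widths of SSE, AVX, and AVX-512; the helper name `lanes` is made up for this example.

```python
# Register widths in bits for each x86 vector extension generation.
REGISTER_BITS = {"SSE": 128, "AVX": 256, "AVX-512": 512}


def lanes(register_bits: int, element_bits: int) -> int:
    """Return how many elements of the given size fit in one register."""
    return register_bits // element_bits


if __name__ == "__main__":
    for name, bits in REGISTER_BITS.items():
        # 32-bit and 64-bit floats are two common element sizes.
        print(f"{name}: {lanes(bits, 32)} x float32, {lanes(bits, 64)} x float64")
```

For 32-bit floats, that works out to 4 values per SSE register, 8 per AVX register, and 16 per AVX-512 register.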

Core Capabilities Of AVX-512

AVX-512 comes with cool features. It’s like a Swiss Army knife for CPUs.

- Speeds Up Math: It does complex math fast. This is a big deal for AI.

- More Data: Handles more data in one go. This means better performance.

- Power Trade-Off: Does more work per instruction, though sustained AVX-512 use draws more power and produces more heat, which matters in big data centers.

Breaking Down AI Workloads

Artificial intelligence (AI) powers many of today’s technological marvels. Understanding what AI workloads actually do clarifies the competition between Intel and AMD. Tools like Intel’s AVX-512 instructions help Xeon come out ahead in certain AI scenarios, but what does that involve? Let’s dive into the specifics of AI workloads.

Vector Processing In AI Algorithms

AI algorithms crave efficient data handling. The focus is on vector processing, crucial for operations like facial recognition and natural language processing. AVX-512 instruction sets shine here by allowing processors to handle large datasets with simultaneous computations.

Because a single AVX-512 instruction operates on up to 16 single-precision values at once, Intel’s processors can accelerate complex AI tasks. The advantage is not about doing one calculation faster; it is about doing many calculations at once.
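The idea can be sketched with a toy model. The Python below does not execute any AVX-512 instructions; it only counts how many "instructions" a scalar loop and a 16-lane vector loop would need for the same addition. The function names and the one-instruction-per-chunk accounting are simplifying assumptions for illustration.

```python
LANES = 16  # float32 values per 512-bit register


def scalar_add(a, b):
    """Add element by element: one modelled instruction per element."""
    out = [x + y for x, y in zip(a, b)]
    return out, len(out)


def simd_add(a, b, lanes=LANES):
    """Add in chunks of `lanes` elements: one modelled instruction per chunk."""
    out = []
    instructions = 0
    for start in range(0, len(a), lanes):
        chunk_a = a[start:start + lanes]
        chunk_b = b[start:start + lanes]
        out.extend(x + y for x, y in zip(chunk_a, chunk_b))
        instructions += 1  # the whole chunk counts as a single instruction
    return out, instructions


if __name__ == "__main__":
    data = list(range(100))
    _, scalar_count = scalar_add(data, data)
    _, simd_count = simd_add(data, data)
    print(f"scalar: {scalar_count} instructions, 16-lane: {simd_count} instructions")
```

On real hardware the speedup is usually smaller than the lane count suggests, because memory bandwidth and clock behavior also play a part.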

The Demand For Computational Power

AI’s thirst for power cannot be overstated. Each iteration and evolution demand more computational strength. In this sphere, AVX-512 has proven to be a trump card for specific Intel Xeon processors when pitted against AMD’s EPYC.

For instance, in image processing workloads that can leverage AVX-512, performance can jump significantly. This highlights the importance of choosing the right tool for the job. AVX-512 is not a one-size-fits-all solution, but where it fits, it provides a remarkable edge.

While Intel’s integration of AVX-512 gives it a boost in particular AI challenges, the broader AI landscape remains highly competitive. To stay ahead, Intel must match this with consistent innovation across all AI workloads.

AMD’s Response To Intel’s Innovation

As Intel leans on AVX-512 instructions to boost AI performance, AMD is not standing still. The tech world is eager to see AMD’s counter-punch in this high-stakes AI arena. With Intel’s strategy of using Xeon’s specialized instructions for certain AI workloads, AMD is preparing its response. The focal point of its plan is to strengthen its AI acceleration capabilities. Let’s explore how AMD responds to this strategic move by Intel.

AMD’s Take On AI Acceleration

AMD is harnessing the power of its existing technologies to bolster AI performance on its platforms. While Intel banks on AVX-512, AMD capitalizes on its own strengths with solutions like ROCm (Radeon Open Compute) for diverse AI workloads. This platform supports a wide range of machine learning frameworks. It integrates seamlessly with AMD’s GPUs, optimizing computational efficiency for artificial intelligence tasks. Here’s how AMD’s ecosystem stands against Intel’s specialized instructions:

- ROCm’s open-source nature encourages broad adoption and community-driven innovation.

- Direct GPU acceleration enables rapid processing of the large data sets that are crucial for AI.

- Cross-compatibility with popular AI frameworks like TensorFlow and PyTorch ensures flexible deployment.
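As a minimal sketch of what that framework compatibility looks like in practice, the snippet below picks a device in PyTorch. It relies on the fact that ROCm builds of PyTorch expose AMD GPUs through the same `torch.cuda` interface used for other GPUs; the helper name `pick_device` is made up for this example, and it falls back to the CPU when PyTorch is not installed.

```python
def pick_device() -> str:
    """Return "cuda" if PyTorch reports a usable GPU, otherwise "cpu"."""
    try:
        import torch  # optional dependency; not part of the standard library
    except ImportError:
        return "cpu"
    # ROCm builds of PyTorch report AMD GPUs through torch.cuda as well.
    return "cuda" if torch.cuda.is_available() else "cpu"


if __name__ == "__main__":
    print("Running on:", pick_device())
```

The same script can then run unchanged on a GPU-equipped machine or on a CPU-only one.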

Comparing AMD’s And Intel’s Approaches

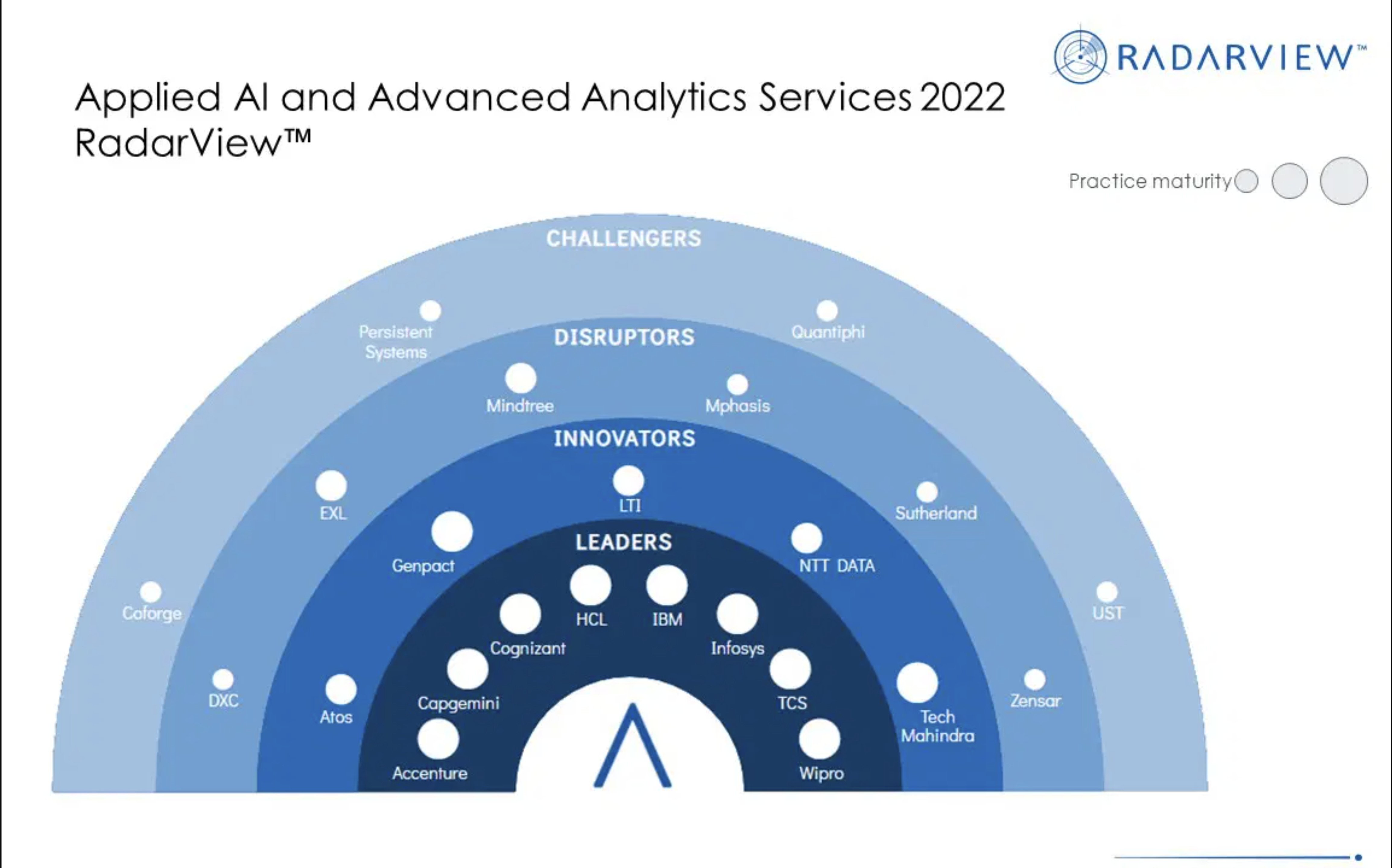

Eyeing the landscape, we compare the differing philosophies behind AMD’s and Intel’s approaches.

| Feature | Intel’s AVX-512 | AMD’s Approach |
|---|---|---|
| Instruction Set | Specialized | General Purpose |
| Integration | CPU-centric | GPU-accelerated |
| Support | Specific AI Scenarios | Broad AI Frameworks |
| Community | Limited | Open-Source Driven |

In summary, AMD favors a more holistic and flexible AI strategy over tailored CPU instructions. It aims to provide developers with a versatile toolkit to push AI boundaries across various workloads.

Credit: drsalbertspijkers.blogspot.com

Performance Benchmarks: AVX-512 Vs. AMD

Competing for the AI workload crown, Intel’s Xeon leverages AVX-512 instructions. This high-performance vector processing capability aims to outperform AMD’s EPYC CPUs in specific tasks.

Synthetic Benchmarks

Experts run synthetic tests to compare Intel and AMD CPUs on the kind of heavy computational tasks that AVX-512 is built for. These benchmarks provide insights, but they don’t predict real-world performance perfectly.

| Test | Intel Xeon (AVX-512) | AMD EPYC |
|---|---|---|
| Matrix Multiplication | Higher scores | Competitive scores |
| Floating Point Operations | Peak performance | Good performance |
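To show the shape of such a synthetic test, here is a minimal sketch of a matrix multiplication benchmark. It is plain Python, so it does not use AVX-512 and its result says nothing about Xeon or EPYC; the function names are made up for this example, and the operation count uses the conventional 2n³ estimate for an n × n product.

```python
import time


def matmul(a, b):
    """Multiply two square matrices given as lists of rows."""
    # Transpose b once so each output cell is a dot product of two sequences.
    b_columns = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in b_columns] for row in a]


def matmul_flops(n: int) -> int:
    """Conventional operation count for an n x n matrix product."""
    return 2 * n ** 3


def benchmark(n: int = 64) -> float:
    """Time one n x n multiplication and return the rate in GFLOPS."""
    a = [[float(i + j) for j in range(n)] for i in range(n)]
    b = [[float(i - j) for j in range(n)] for i in range(n)]
    start = time.perf_counter()
    matmul(a, b)
    elapsed = time.perf_counter() - start
    return matmul_flops(n) / elapsed / 1e9


if __name__ == "__main__":
    print(f"{benchmark():.4f} GFLOPS (pure Python, illustration only)")
```

A score like this measures one narrow operation under ideal conditions, which is why synthetic numbers and real-world results can diverge.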

Real-World Tests

Actual AI workloads reveal true CPU capabilities.

- Image Processing: Intel shows an edge with AVX-512.

- Data Analytics: Both CPUs perform impressively.

- Deep Learning: Differences in frameworks affect performance.

Efficiency And Thermal Considerations

AVX-512 is powerful but consumes more energy, and the increased power draw leads to thermal challenges. AMD’s chips, by contrast, offer impressive performance-per-watt figures.

| Metric | Intel Xeon (with AVX-512) | AMD EPYC |
|---|---|---|
| Power Efficiency | Lower efficiency | Higher efficiency |
| Thermal Output | More heat generation | Less heat generation |
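The trade-off behind these rows comes down to simple arithmetic: performance per watt improves only when the speedup is larger than the increase in power draw. The sketch below illustrates this; the function name is made up, and the numbers in the usage section are placeholders, not measurements of any Xeon or EPYC part.

```python
def efficiency_change(speedup: float, power_ratio: float) -> float:
    """Return the relative change in performance per watt.

    speedup:     new throughput divided by old throughput
    power_ratio: new power draw divided by old power draw
    A result above 1.0 means efficiency improved; below 1.0 means it got worse.
    """
    if power_ratio <= 0:
        raise ValueError("power_ratio must be positive")
    return speedup / power_ratio


if __name__ == "__main__":
    # Placeholder figures chosen only to show both outcomes.
    print(efficiency_change(speedup=1.5, power_ratio=1.2))  # efficiency improves
    print(efficiency_change(speedup=1.2, power_ratio=1.5))  # efficiency drops
```

So a faster result is not automatically a more efficient one; the power bill has to be part of the comparison.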

Future Of AI Chip Wars

The competition in artificial intelligence (AI) chips is heating up. Intel and AMD are in a fierce battle, with Intel’s AVX-512 instruction set as a potential game-changer. These specialized instructions aim to boost Intel’s Xeon processors, enhancing performance in AI tasks. It’s a strategic move against AMD’s EPYC processors. The race is on to lead the AI hardware domain.

The Next Frontier In AI Hardware

As AI workloads evolve, the demand for more potent processing capabilities skyrockets. Intel’s bet on AVX-512 may define the next era of performance in AI-centric computing. This technology is well suited to the dense vector arithmetic at the heart of AI and deep learning applications. It represents a significant advance as companies seek specialized silicon that can handle increasingly complex algorithms.

Industry Implications And Market Trends

- Intel’s adoption of AVX-512 targets niche markets with high-performance needs.

- AMD continues its push for market share with EPYC processors, known for their versatility.

- AI-specific hardware could lead to market segmentation, with different chips for various tasks.

An understanding of these market shifts and trends is crucial for businesses and developers alike. The focus on AI acceleration emphasizes the importance of chip specialization, potentially reshaping the competitive landscape.

User Experiences And Ecosystems

Tools and technologies shape user experiences in powerful ways. Intel’s leap into AVX-512 instructions promises advancements in AI workload efficiency. Yet, the true value lies in how developers harness this power and optimize software for peak performance, directly influencing the ecosystem of tools available to the end-user.

Developers’ Take On AVX-512 And AMD’s Tools

Developers play a key role in tech adoption. Their feedback on AVX-512 is vital. Industry forums and tech discussions shed light on developers’ opinions. Many praise Intel’s AVX-512 for its potential in speeding up computational tasks, while others remain cautious, citing the need for wider support.

- AVX-512 enables complex calculations.

- It supports deep learning and AI.

- Some developers worry about code compatibility.

By contrast, AMD’s ecosystem is lauded for its robust toolchain. Developers appreciate tools like ROCm, which simplify GPU-accelerated computing.

Impact On Software Optimization

Software performance hinges on optimization. Developers optimizing for Intel’s AVX-512 see potential in targeted applications. Key programs can unlock performance gains by leveraging these instructions.
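One common way to target these instructions without breaking compatibility is runtime dispatch: the program checks which vector extensions the CPU reports and picks the fastest code path that will actually run. The sketch below assumes the feature names that Linux reports on x86 (`avx512f`, `avx2`, `sse2`); the kernel names are hypothetical placeholders.

```python
# Preference order: widest vector extension first.
# The kernel names are hypothetical placeholders for real implementations.
KERNEL_PREFERENCE = [
    ("avx512f", "avx512_kernel"),
    ("avx2", "avx2_kernel"),
    ("sse2", "sse2_kernel"),
]


def select_kernel(cpu_flags) -> str:
    """Return the name of the best kernel the given CPU flags allow."""
    for flag, kernel in KERNEL_PREFERENCE:
        if flag in cpu_flags:
            return kernel
    # No vector extension found: fall back to plain scalar code.
    return "scalar_kernel"


if __name__ == "__main__":
    print(select_kernel({"sse2", "avx2", "avx512f"}))
    print(select_kernel({"sse2", "avx2"}))
```

With this pattern the same binary can run on CPUs with and without AVX-512, which speaks to the compatibility worry some developers raise.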

Ecosystem alignment is crucial. Software companies must weigh the benefits of optimization efforts such as:

| Intel AVX-512 | AMD’s Ecosystem |
|---|---|
| Improved AI inference speeds. | Broad GPU support. |
| Enhanced data analytics. | Developer-friendly platforms. |
| Selective application boost. | Comprehensive tools and libraries. |

Such strategic optimizations can leverage Intel’s strengths, enhancing user experiences where it matters most.

Frequently Asked Questions

What Is AVX-512 Used For?

AVX-512 is a set of CPU instructions that boosts performance for heavy computational tasks, such as scientific simulations, financial analytics, and 3D modeling.

Which CPUs Support AVX-512?

Certain Intel Xeon and Core processors support AVX-512, including parts from the Skylake-X, Cascade Lake, Ice Lake, and Rocket Lake families. Alder Lake is a special case, since Intel does not officially support AVX-512 on it. AMD also added AVX-512 support starting with its Zen 4 architecture. Users should check individual processor specifications to confirm AVX-512 support.
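On Linux running on an x86 machine, one quick way to check is to look for the `avx512f` (AVX-512 Foundation) flag in `/proc/cpuinfo`. The sketch below assumes that file layout; the helper names `parse_flags` and `has_avx512` are made up for this example, and other operating systems need a different method.

```python
from pathlib import Path


def parse_flags(cpuinfo_text: str) -> set:
    """Return the CPU feature flags from the first "flags" line found."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            return set(value.split())
    return set()


def has_avx512(cpuinfo_text: str) -> bool:
    """True if the AVX-512 Foundation flag is present."""
    return "avx512f" in parse_flags(cpuinfo_text)


if __name__ == "__main__":
    path = Path("/proc/cpuinfo")  # assumption: Linux on x86
    if path.exists():
        print("AVX-512 Foundation:", has_avx512(path.read_text()))
    else:
        print("No /proc/cpuinfo on this system")
```

The manufacturer's specification page remains the authoritative source, since a flag can be missing when a feature is disabled in firmware.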

What Is Said About AVX-512 On Reddit?

AVX-512 refers to Advanced Vector Extensions 512, a set of instructions used in high-performance computing tasks. Reddit discussions may focus on its impact on processor performance and compatibility with various applications.

What Is Intel’s Secret Weapon Against AMD?

Intel’s secret weapon to compete with AMD in AI workloads is the AVX-512 instruction set. This feature, found within Intel’s Xeon processors, can accelerate performance in certain scenarios, offering a competitive edge in specific AI applications.

Conclusion

Intel’s strategy to dominate the AI workload space hinges on the power of AVX-512 instructions, positioning Xeon as a formidable contender against AMD’s EPYC. Specific workloads where these instructions shine could give Intel the edge it seeks. Nonetheless, this isn’t a guaranteed win, and Intel must intensify its innovation efforts to pose a significant threat to AMD’s market stronghold.